What is driving the next era of computing? Since the dawn of the digital revolution, humanity has witnessed extraordinary advances. However, among all the innovations, there is one that stands out as the future of computing: the cloud.

In this article, we explore the trajectory of computing, from Joseph Licklider’s vision in 1960 to the present day, when the cloud has become a fundamental part of the technological landscape. Discover how cloud computing, predicted 64 years ago, has developed and taken its place in global business operations, driving a revolution in the way we use and access computing resources.

The future of computing is in the cloud

Many of humanity’s inventions have excited and driven civilization forward, and everyone has their own list of the most important ones. But there is one that is probably on almost every list: computing. Its history is too long to tell here, but its benefits were recognized very early on.

In March 1960, the computer scientist Joseph Licklider published an article titled “Man-Computer Symbiosis” in the journal IRE Transactions on Human Factors in Electronics. In it, he described a complementary (“symbiotic”) relationship between humans and computers at some point in the future. We now know that this future has arrived: computing is part of virtually everything we do, and we live in symbiosis with it.

Several factors made this possible. First, technologies were developed that allowed computing resources to be shared among several users simultaneously. Second, those resources (storage, processing, telecommunications) became progressively cheaper. Finally, companies emerged that offer these services to the global market, making cloud computing an accessible and affordable solution for everyone.

Cloud computing was predicted 64 years ago

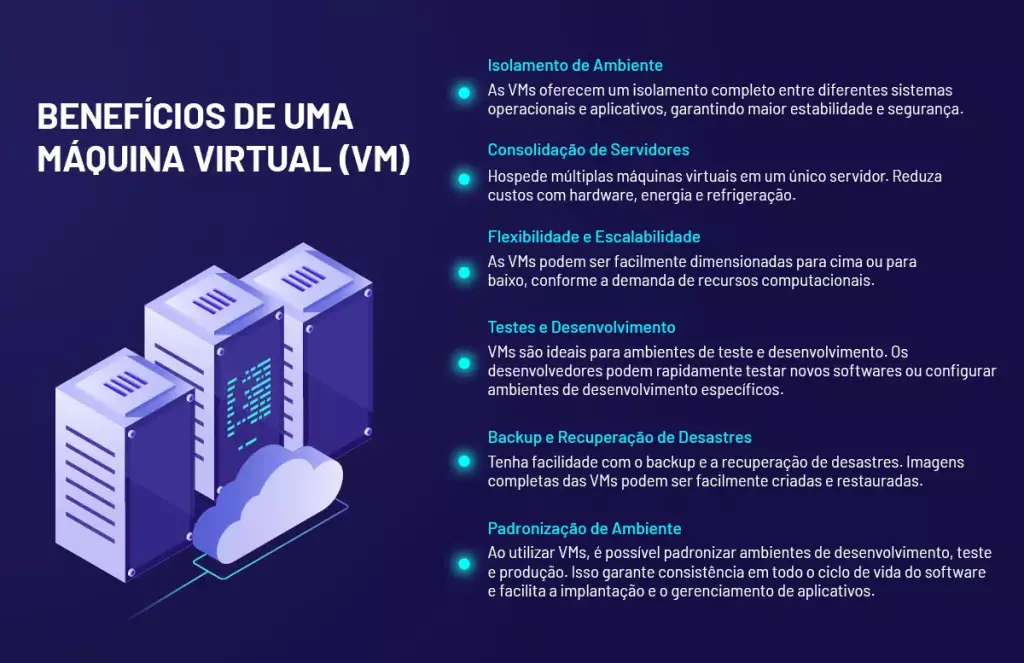

Although Licklider’s article pointed to some of the benefits of distributed computing, no one could have predicted how advanced these services would become. Today, for example, you can create a virtual machine (VM) in a few seconds: a fast, fully equipped computer with everything an individual or a corporation needs. It is, however, virtual: it exists as software running on shared physical hardware rather than as a dedicated physical machine.

Other scientists at the time, such as John Backus – the inventor of the FORTRAN language – had already predicted that it would be possible to share time on a large computer, so that it could be used as if it were a collection of several smaller computers. Just like VMs.

In the time between then and now, computing has developed and expanded to offer resources to more and more people. Time-sharing has evolved into virtual machines, which are complete computers for whatever purpose the user wishes.

Affordable hardware and evolving networks

Over the same period, two things happened: hardware became cheaper, and telecommunications and network technologies evolved. Approximately every two years, processors doubled in processing capacity while their costs fell. At the same time, telecommunications became increasingly digital, faster and cheaper.
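
To get a feel for what “doubling every two years” adds up to, here is a minimal sketch. It assumes an idealized, perfectly regular doubling period, which real hardware only approximated; the function name `growth_factor` is ours, chosen for illustration.

```python
def growth_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Return how many times capacity multiplies after `years`,
    assuming it doubles exactly once per `doubling_period_years`
    (an idealization, not a measured figure)."""
    return 2 ** (years / doubling_period_years)


# Two decades of doubling every two years is ten doublings.
print(growth_factor(20))  # 1024.0
```

Under this idealized assumption, twenty years of doubling multiplies capacity roughly a thousandfold, which helps explain why resources that once required a dedicated machine could later be shared and rented at low cost.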

All this combined has allowed large corporations to create data centers for their exclusive use. One of these corporations, which was developing data centers for its global e-commerce operations, had resources with on-demand elasticity in processing, memory and storage at its fingertips. In 2003, it concluded that it could offer these resources to developers and companies that preferred to use them remotely, rather than buying new computers. It was much cheaper.

These resources were somewhere on the Internet, and users couldn’t tell exactly where. This is how cloud computing was born. The company began marketing it in 2006, creating the first public cloud.

Cloud adoption has been progressive

Adoption of the cloud was not immediate. IT managers at the time used to say that working in the cloud simply meant processing and storing data on other people’s computers. However, large corporations, such as banks, found that the cloud model was a good solution and built their own: private clouds were born.

By also using public clouds for non-strategic operations, they created what is known as a “hybrid cloud”. Little by little, more and more companies joined the cloud computing model, spending on operations (OPEX) rather than capital (CAPEX). They did so for various reasons, two of which are fundamental: it was cheaper, and the cloud allows resources to be created or expanded almost instantly, which is not possible in the traditional model.

Today, 18 years after the creation of the first public cloud, cloud computing has grown in number of adopters, in usefulness and in technology. It has become an essential computing tool for thousands of established companies that need to expand their infrastructure, and for a huge number of startups that, by adopting it, have been able to launch their ventures without investing fortunes in their own data centers.

Huge Networks Cloud is here

This year, Huge Networks is bringing its state-of-the-art cloud platform to these and other types of customers. Huge’s cloud launches with cutting-edge technology for processing, management, networking, service level and security, in a combination rarely found in a single supplier. Despite this quality of resources, the service is priced fairly and transparently, with the best cost-benefit ratio in the sector.

Features include high-end hypervisors, state-of-the-art servers with guaranteed performance and availability, and backups and snapshots of virtual machines for restoration at any time. The platform also includes in-house defense against distributed denial-of-service (DDoS) attacks of up to 40 Gbps and a service level agreement (SLA) of 99.99% availability, backed by infrastructure in tier 3 data centers. Pricing is published in a public table and varies with the number of CPUs contracted (four or eight), memory (from 8 to 32 GB) and storage (from 140 to 420 GB).
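
To put the 99.99% availability figure in perspective, the short sketch below converts an availability percentage into the maximum downtime it permits. This is plain arithmetic, not the terms of any actual contract: the function name `allowed_downtime_minutes`, the 30-day month and the 365-day year are assumptions made for illustration, and a real SLA defines its own measurement window and exclusions.

```python
def allowed_downtime_minutes(availability_percent: float, period_days: float) -> float:
    """Maximum downtime, in minutes, that still meets the given
    availability over a period of `period_days` days."""
    minutes_in_period = period_days * 24 * 60
    unavailable_fraction = 1 - availability_percent / 100
    return minutes_in_period * unavailable_fraction


# Assumed measurement windows: a 30-day month and a 365-day year.
monthly = allowed_downtime_minutes(99.99, 30)
yearly = allowed_downtime_minutes(99.99, 365)

print(f"{monthly:.2f} minutes per month")  # 4.32 minutes per month
print(f"{yearly:.2f} minutes per year")    # 52.56 minutes per year
```

In other words, under these assumptions a 99.99% target leaves room for a little over four minutes of unavailability in a month.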