On May 22, 2023, it will be 50 years since electronics engineer Robert Metcalfe wrote a 12-page memo to his colleagues at Xerox that would mark the birth of the Ethernet standard for data communication networks. In the memo, he described the main details of how the protocol worked. Among its main virtues were transmission efficiency and a mechanism for dealing with packet collisions.
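Ethernet's way of handling collisions was later standardized in IEEE 802.3 as CSMA/CD (carrier sense multiple access with collision detection). When two stations transmit at once, both stop and each waits a random number of "slot times" before retrying, and the random range doubles after every further collision. The Python sketch below shows that truncated binary exponential backoff rule using the classic 10 Mbit/s parameters. It illustrates the standardized rule, not a reconstruction of the 1973 memo, and the function name `backoff_slots` is purely illustrative.

```python
import random

# Classic 10 Mbit/s Ethernet parameters (IEEE 802.3).
SLOT_TIME_US = 51.2   # one slot = 512 bit times = 51.2 microseconds at 10 Mbit/s
BACKOFF_LIMIT = 10    # the random range stops doubling after 10 collisions
ATTEMPT_LIMIT = 16    # after 16 failed attempts the frame is discarded


def backoff_slots(collisions: int, rng: random.Random) -> int:
    """Slot times to wait after the n-th consecutive collision on one frame."""
    if not 1 <= collisions < ATTEMPT_LIMIT:
        raise ValueError("collision count must be between 1 and 15")
    exponent = min(collisions, BACKOFF_LIMIT)
    # Choose uniformly from 0 .. 2**exponent - 1 slots (randint is inclusive).
    return rng.randint(0, 2 ** exponent - 1)


rng = random.Random(42)  # fixed seed so the sketch is reproducible
for n in (1, 2, 3, 10, 15):
    slots = backoff_slots(n, rng)
    print(f"collision {n:2}: wait {slots:4} slots = {slots * SLOT_TIME_US:8.1f} microseconds")
```

Doubling the range spreads retries out quickly when many stations compete, while keeping the wait short when the network is lightly loaded.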

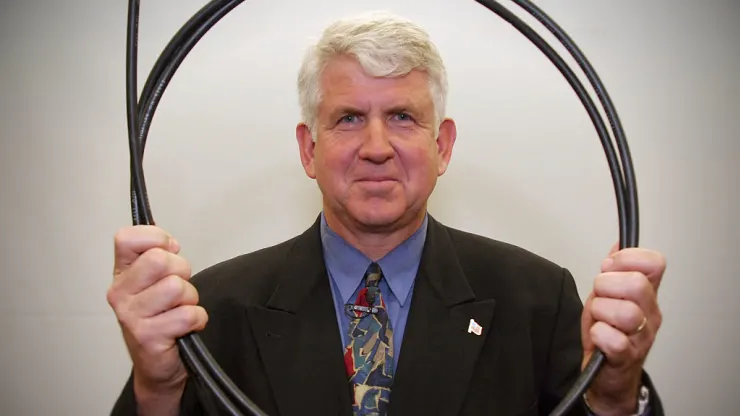

In the space of 50 years, the Ethernet standard has spread around the world and become dominant in networks of all kinds. It was for the invention, standardization and commercialization of Ethernet that, on March 22, 2023, Metcalfe was named the winner of the 2022 Turing Award. Often called the Nobel Prize of computing, the award is presented annually by the Association for Computing Machinery (ACM), the world's largest scientific and educational computing society. Today, it is hard to imagine installing a wired network that isn't Ethernet. However, it wasn't always like this: several other standards competed with it before it became dominant.

The need to create networks appeared very early on in computing environments, both to interconnect equipment and to exchange information. However, it was the Cold War between the United States and the Soviet Union that led the US government to develop and deploy the first data communications network in 1969. This project was carried out by the Defense Department’s Advanced Research Projects Agency (ARPA). The network developed by the agency soon became known as ARPANET. Its function was to provide data communication between US government computers, in case the United States suffered a nuclear attack.

The Internet began with the connection of four computers

The ARPANET was considered a revolution in data communication, not only because it adopted a protocol that would evolve into TCP/IP, but mainly because it was the first network to use packet switching intensively and over long distances. Although the goal was to connect government computers, the first connections were experimental and linked computers at four research institutions. The first was a Scientific Data Systems Sigma 7 running the SEX operating system at the University of California, Los Angeles.

The second was a Scientific Data Systems 940 running the Genie operating system at the Stanford Research Institute in Menlo Park, also in California. The third was an IBM 360/75 running OS/MVT at the University of California, Santa Barbara. Finally, the fourth was a Digital Equipment Corporation PDP-10 running the TENEX operating system at the University of Utah.

In a packet-switching system, the data to be transmitted is divided into packets: blocks of bits whose beginning and end carry fields with predefined functions for the packet's transit, such as delimiters and the source and destination addresses (MAC addresses, in the case of Ethernet). With this structure, each packet travels through the network to its designated destination. This first network, with just four computers, was connected via telephone lines, but it grew steadily: by 1972, it already had 40 machines connected. As it grew and evolved, this network eventually became the Internet.
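To make the idea of addressing fields concrete, the Python sketch below reads the first 14 bytes of an Ethernet II frame: a 6-byte destination MAC address, a 6-byte source MAC address and a 2-byte EtherType that identifies the payload. It assumes the preamble and frame check sequence have already been stripped, as network hardware usually does. ARPANET packets used a different format, so this only illustrates the general principle, and the sample frame and function names are hypothetical.

```python
import struct
from typing import NamedTuple


class EthernetHeader(NamedTuple):
    destination: str
    source: str
    ethertype: int


def format_mac(raw: bytes) -> str:
    """Render 6 raw bytes as the familiar aa:bb:cc:dd:ee:ff notation."""
    return ":".join(f"{b:02x}" for b in raw)


def parse_ethernet_header(frame: bytes) -> EthernetHeader:
    """Split the 14-byte Ethernet II header off the start of a frame."""
    if len(frame) < 14:
        raise ValueError("frame too short for an Ethernet II header")
    # "!" = network (big-endian) byte order; 6s 6s = two MAC addresses; H = 16-bit EtherType.
    dst, src, ethertype = struct.unpack("!6s6sH", frame[:14])
    return EthernetHeader(format_mac(dst), format_mac(src), ethertype)


# Hypothetical frame: broadcast destination, made-up source, EtherType 0x0800 (IPv4).
frame = bytes.fromhex("ffffffffffff" "02000000000a" "0800") + b"payload..."
header = parse_ethernet_header(frame)
print(header)
```

Switches rely on exactly these leading fields: they can forward a frame once they have read the destination address, without inspecting the payload.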

A competition for connectivity standards

Ethernet was not the only local networking technology of its era, and several competing architectures appeared in the years that followed. Of these, two stand out: ARCNET (Attached Resource Computer NETwork) and Token Ring.

ARCNET was a local area network protocol launched in 1977 by the US company Datapoint Corporation. It was the first widely available networking system for microcomputers, and it gained market share in the 1980s because it accepted devices from any manufacturer, while many competing standards worked only with a single vendor's equipment. ARCNET's speed was limited to 2.5 Mbit/s and, although it was popular, it was considered less reliable and less flexible than Ethernet.

The other notable network standard of the time was IBM's Token Ring, which the company marketed vigorously in the 1980s. This architecture connects devices in a ring or star topology and avoids packet collisions by ensuring that only one host, the one holding a special frame called the token, can send data at any given moment. A station that wants to transmit waits for the token to reach it; its frame then travels around the ring and back to the sender, which removes it and passes the token on to the next station.
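The token-passing rule can be illustrated with a small simulation. The Python sketch below passes a single token around a ring of hypothetical stations A, B and C; only the current holder may send, and it then hands the token to the next station. It is a simplification under stated assumptions: each holder sends at most one frame per visit, and real Token Ring details such as token holding timers, priorities and the active monitor are left out.

```python
from collections import deque


def simulate_token_ring(queues: dict[str, list[str]], rounds: int) -> list[tuple[str, str]]:
    """Circulate one token; only its holder may send a frame.

    `queues` maps station names, in ring order, to the frames they want to send.
    Returns (station, frame) pairs in the order they were transmitted.
    """
    pending = {station: deque(frames) for station, frames in queues.items()}
    ring = list(queues)  # dicts keep insertion order, which we treat as ring order
    sent: list[tuple[str, str]] = []
    for _ in range(rounds):
        for holder in ring:  # the token visits each station in turn
            if pending[holder]:
                # Only the token holder transmits, so two frames never collide.
                sent.append((holder, pending[holder].popleft()))
            # The holder then releases the token to the next station in the ring.
    return sent


log = simulate_token_ring({"A": ["a1", "a2"], "B": [], "C": ["c1"]}, rounds=2)
print(log)  # [('A', 'a1'), ('C', 'c1'), ('A', 'a2')]
```

Because access follows the token's path, a station's worst-case wait is bounded by one trip around the ring, which is the predictability Token Ring's supporters valued.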

Launched in October 1985, IBM's Token Ring initially operated at 4 Mbit/s. Even though this was slower than Ethernet's 10 Mbit/s, many experts considered it superior, largely because token passing eliminated collisions and gave every station predictable access to the network. Ethernet, however, offered cheaper networking solutions, which made it popular at Token Ring's expense; the Token Ring standard still exists but has little commercial presence. This evolution of networks over time laid the groundwork for cloud computing and other technologies that depend on efficient, flexible data transmission on a global scale.

Optical fiber reaches networks

Another notable standard that emerged in the early 1990s was FDDI, short for Fiber Distributed Data Interface. It used optical fiber and was attractive from the start because it ran at 100 Mbit/s and could span distances of up to 200 kilometers, while Ethernet at the time reached only 10 Mbit/s. In 1998, however, the launch of Gigabit Ethernet, which was faster and cheaper, erased the advantage FDDI still held.
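To put these speeds in perspective, the Python sketch below computes how long a hypothetical 100 MB file (100,000,000 bytes) would take to cross each network at the nominal rates mentioned in this article. It assumes the full line rate is available and ignores protocol overhead, access delays and retransmissions, so real transfers would be slower.

```python
# Nominal line rates mentioned in the article, in megabits per second.
RATES_MBIT_S = {
    "ARCNET": 2.5,
    "Token Ring (1985)": 4,
    "Ethernet (10 Mbit/s)": 10,
    "FDDI": 100,
    "Gigabit Ethernet": 1000,
}


def transfer_seconds(size_bytes: int, rate_mbit_s: float) -> float:
    """Idealized transfer time: bits to send divided by bits per second."""
    return size_bytes * 8 / (rate_mbit_s * 1_000_000)


size = 100_000_000  # hypothetical 100 MB file
for name, rate in RATES_MBIT_S.items():
    print(f"{name:22} {transfer_seconds(size, rate):8.1f} s")
```

Under these assumptions, the same file takes 320 seconds over ARCNET, 80 seconds over 10 Mbit/s Ethernet, 8 seconds over FDDI and under a second over Gigabit Ethernet.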

All these developments made Ethernet the fundamental technology behind the Internet itself, a network that today has 4.9 billion users and is part of our daily lives. In awarding Metcalfe the Turing Award, the Association for Computing Machinery noted that there are around seven billion Ethernet ports on the planet today. These figures leave little doubt that the award was well deserved.

This content was produced by Huge Networks. Our company protects your corporate network, accelerates cloud applications, mitigates DDoS attacks and keeps cyber threats at bay.